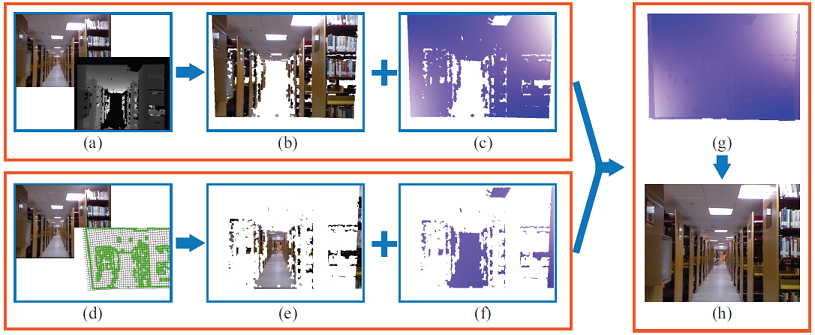

Frame generation pipeline:

We use the color and depth images in (a) to generate the projection in (b) and the motion field in (c). Many pixels are missing because the depth image is incomplete. Hence, we warp the color image with the ‘content-preserving’ warping in (d), guided by the green control points and a regular grid. This warping generates a color image (e) and a motion field (f). We then generate a complete motion field (g) by combining (c) and (f). The final video frame (h) is created by warping the original frame with (g).
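The depth-projection, combination, and final warping steps can be sketched in NumPy as below. This is a minimal illustration under assumptions not stated in the text: a pinhole camera with intrinsics `K` and a known relative pose `(R, t)` for the new view; motion fields stored as forward per-pixel displacements on the original pixel grid, with NaN marking missing pixels; and "combining" (c) and (f) read as filling the holes of (c) with (f). The content-preserving warp itself, panels (d) to (f), is not implemented; its motion field is simply taken as input. All function names are hypothetical.

```python
import numpy as np


def project_motion_field(depth, K, R, t):
    """Forward motion from reprojecting each pixel, using its depth, into a new pose (R, t).

    Pixels without valid depth get NaN, mirroring the holes in panel (c).
    """
    h, w = depth.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    pix = np.stack([xs, ys, np.ones_like(xs)], axis=-1)   # homogeneous pixel coords (h, w, 3)
    pts = (pix @ np.linalg.inv(K).T) * depth[..., None]   # back-project to 3D camera coords
    proj = (pts @ R.T + t) @ K.T                          # move to the new pose, then project
    z = proj[..., 2]
    valid = (depth > 0) & (z > 0)                         # need depth and a point in front of the camera
    flow = np.full((h, w, 2), np.nan)
    flow[valid] = proj[valid, :2] / z[valid][:, None] - pix[valid, :2]
    return flow


def combine_motion_fields(depth_flow, warp_flow):
    """Keep depth-based motion where it exists; fill the remaining holes from the mesh-warp field."""
    missing = np.isnan(depth_flow).any(axis=-1)
    combined = depth_flow.copy()
    combined[missing] = warp_flow[missing]
    return combined


def forward_warp(image, flow):
    """Nearest-neighbour forward splatting of `image` along `flow`.

    No z-buffering: when several source pixels land on one target pixel, one of them
    overwrites the others. Target pixels that receive nothing stay 0.
    """
    h, w = image.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    fx = xs + flow[..., 0]
    fy = ys + flow[..., 1]
    ok = np.isfinite(fx) & np.isfinite(fy)                # skip pixels with no motion estimate
    tx = np.rint(fx[ok]).astype(int)
    ty = np.rint(fy[ok]).astype(int)
    inside = (tx >= 0) & (tx < w) & (ty >= 0) & (ty < h)  # drop pixels that leave the frame
    out = np.zeros_like(image)
    out[ty[inside], tx[inside]] = image[ys[ok][inside], xs[ok][inside]]
    return out
```

For example, with a focal length of 100 pixels, unit depth everywhere except one missing pixel, and a sideways camera shift of 0.01, every valid pixel moves one pixel to the right; the missing pixel is then filled from a stand-in mesh-warp field before the frame is warped. Nearest-neighbour splatting keeps the sketch short; a practical renderer would also resolve occlusions and fill small cracks left by the forward warp.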

Results: